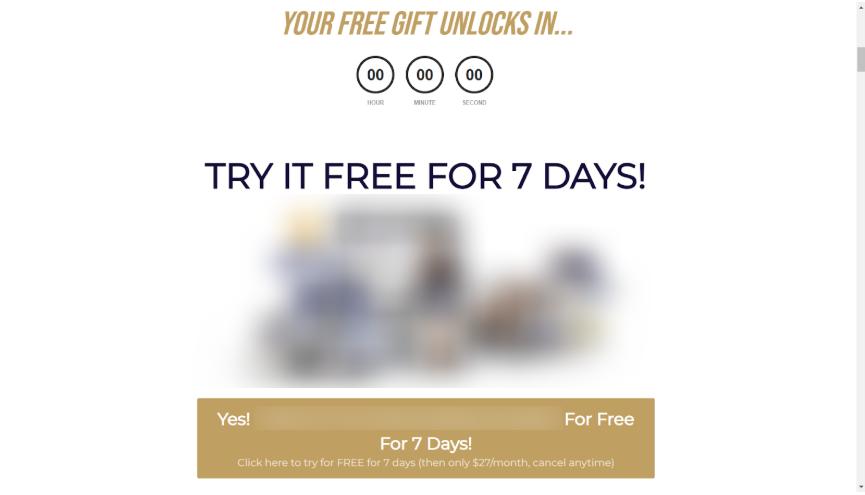

We want to test whether the current pricing for our client’s community membership is well-aligned with their audience’s budget, and identify the pricing strategy that maximizes revenue. The existing offer is $27 per month with a one-week free trial.

Our goal is to test alternative pricing options to see which scenario leads to the highest overall income, while also understanding audience preferences and satisfaction levels.

In addition, we want to evaluate the role of the free trial itself: Does it help increase revenue by encouraging sign-ups, or does it reduce perceived commitment and lead to higher cancellation rates?

In the end, we need to measure two things:

- Whether offering a free trial helps or hurts conversions

- How different price points affect conversions

Hypothesis

We believe our current plan, $27 per month with a 7-day free trial, can be redesigned to better suit our audience and, more importantly, to generate more revenue.

Test Design

The original price is $27 with a 7-day free trial. To test both price and trial impact, we designed four variants of the thank-you page:

- Control: $27 with 7-day free trial

- Test Group 1: $27 with no free trial

- Test Group 2: $17 with 7-day free trial

- Test Group 3: $17 with no free trial
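The four variants form a 2×2 grid (two prices, trial or no trial), which is what lets us look at the price effect and the trial effect separately. As a rough sketch of that layout, here is one way to enumerate the variants and assign each user to one of them consistently. The hash-based assignment, the `assign_variant` helper, and the `salt` value are assumptions for illustration; in practice the experimentation tool usually handles assignment for you.

```python
import hashlib
from itertools import product

PRICES = (27, 17)
TRIAL_OPTIONS = (True, False)

# Order matches the list above: Control, Test Group 1, Test Group 2, Test Group 3.
VARIANTS = [
    {"price": price, "free_trial": trial}
    for price, trial in product(PRICES, TRIAL_OPTIONS)
]


def assign_variant(user_id: str, salt: str = "pricing-test") -> dict:
    """Map a user to one of the four variants (hypothetical helper).

    Hashing the user ID means the same user always lands in the same variant,
    and users are spread roughly evenly across the four groups.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return VARIANTS[int(digest, 16) % len(VARIANTS)]


print(assign_variant("user-123"))
```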

Results

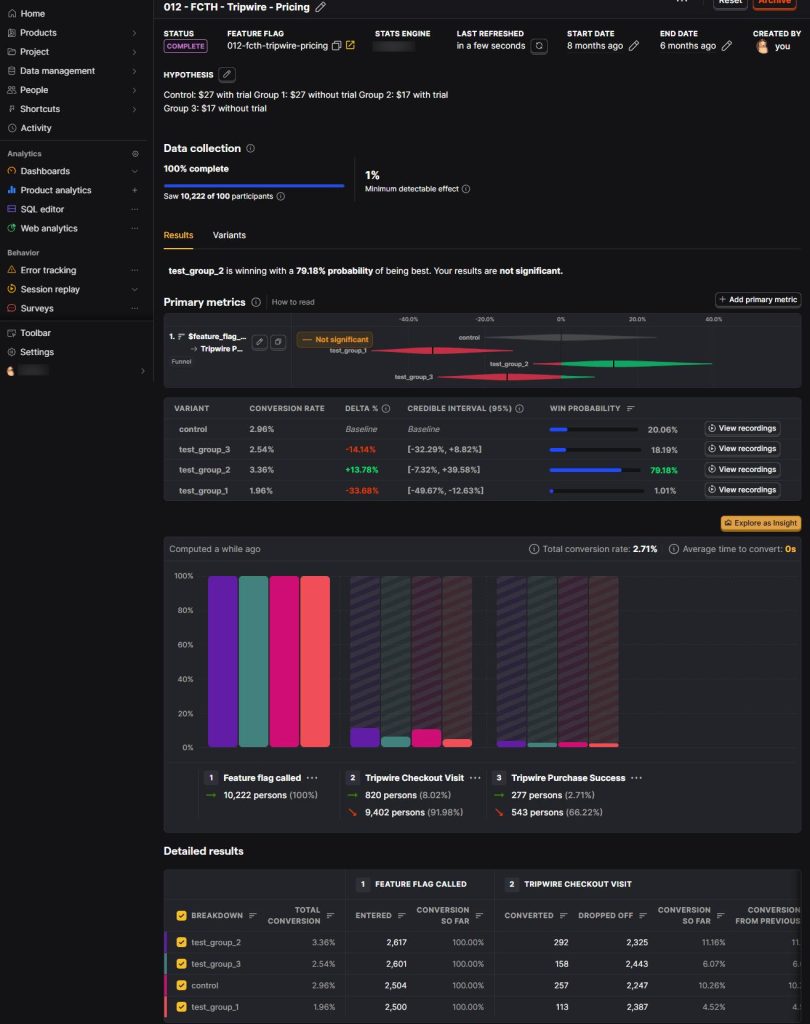

The experiment ran for ten days starting Friday, October 25, 2024, with 10,222 users participating.

Test Group 2 ($17 with a 7-day free trial) performed best on conversion rate, with a 79.18% probability of being the top option.
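For readers wondering where a "probability of being the top option" comes from, a common approach is Bayesian: model each variant's conversion rate as a Beta distribution, draw many samples, and count how often each variant comes out on top. The sketch below shows that general technique. It is not necessarily the exact calculation our tool performs, and the conversion counts are hypothetical, not the experiment's data.

```python
import random


def probability_of_being_best(results, draws=20_000, seed=0):
    """Estimate, per variant, the chance that its true conversion rate is the highest.

    results maps variant name -> (conversions, visitors).
    With a uniform Beta(1, 1) prior, each variant's posterior is
    Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in results}
    for _ in range(draws):
        sampled = {
            name: rng.betavariate(1 + conversions, 1 + visitors - conversions)
            for name, (conversions, visitors) in results.items()
        }
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}


# Hypothetical counts for illustration only.
example_results = {
    "control_27_trial": (120, 2500),
    "group1_27_no_trial": (90, 2500),
    "group2_17_trial": (135, 2500),
    "group3_17_no_trial": (105, 2500),
}

probabilities = probability_of_being_best(example_results)
for name, p in probabilities.items():
    print(f"{name}: {p:.1%}")
```

Note that this number only describes conversion rate. It says nothing about how much revenue each variant brings in over time.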

Conclusion

Test Group 2 clearly had the highest conversion rate because it was cheaper and offered a one-week free trial. At first glance, it seemed like the obvious winner, and one of our coworkers even ended the test early and chose Group 2 as the permanent option 🙂 But that was actually a mistake.

The only metric that matters to us is how much money we make (total income) from each test group, and that number doesn’t reveal itself in the first weeks or even the first month. Early on, the groups with the free trial had generated no revenue at all, so stopping the test at that point meant judging them on sign-ups alone. That’s the key reason you can’t draw conclusions from short-term data.

Another factor is that this offer is a subscription, which means we’re dealing with recurring revenue. To measure it properly, you need to review financial data over at least a couple of months.

After running the test, we had the behavioral data in PostHog, but PostHog can’t track actual revenue, so we reviewed the financial data in ThriveCart. That revealed an important insight:

After two months, users who signed up with the free trial had a much higher churn rate (the percentage of users who stop using your service over time) than those who joined without a trial. In other words, after this period, there were more paying subscribers remaining in the groups that didn’t offer a free trial.
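The churn comparison itself is a simple calculation: for each group, divide the number of subscribers who cancelled by the number who started. Here is a minimal sketch, assuming the payment data has been reduced to one `(group, is_active)` pair per subscriber. That record shape and the sample rows are hypothetical, not a ThriveCart export format or our real data.

```python
from collections import defaultdict


def churn_by_group(subscriptions):
    """Return {group: churn rate}, where churn = cancelled / started.

    subscriptions is an iterable of (group, is_active) pairs, one per
    subscriber, with is_active taken at the end of the review period.
    """
    started = defaultdict(int)
    cancelled = defaultdict(int)
    for group, is_active in subscriptions:
        started[group] += 1
        if not is_active:
            cancelled[group] += 1
    return {group: cancelled[group] / started[group] for group in started}


# Hypothetical rows for illustration only.
sample_rows = [
    ("trial", True), ("trial", False), ("trial", False),
    ("no_trial", True), ("no_trial", True), ("no_trial", False),
]

churn = churn_by_group(sample_rows)
for group, rate in churn.items():
    print(f"{group}: {rate:.0%} churn")
```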

Interestingly, among the groups without the trial, the $17 version converted better than the $27 version, but not by enough: the extra $10 per subscriber more than made up for the $27 version’s lower conversion rate. As a result, total revenue from Test Group 1 was higher than from Test Group 3.
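The arithmetic behind this trade-off is worth spelling out: to bring in the same monthly revenue, a $17 plan needs 27/17, or roughly 1.59 times, as many paying subscribers as a $27 plan. The sketch below compares cumulative revenue for two groups under that logic. The sign-up counts and the constant monthly retention rate are hypothetical assumptions chosen for illustration, not figures from our test.

```python
def cumulative_revenue(paying_signups, price, monthly_retention, months):
    """Sum subscription revenue over `months` billing cycles.

    paying_signups: subscribers who make the first payment
    monthly_retention: share of subscribers who renew each cycle (assumed constant)
    """
    subscribers = float(paying_signups)
    total = 0.0
    for _ in range(months):
        total += subscribers * price
        subscribers *= monthly_retention
    return total


# Hypothetical inputs for illustration only.
group1 = cumulative_revenue(paying_signups=90, price=27, monthly_retention=0.85, months=3)
group3 = cumulative_revenue(paying_signups=105, price=17, monthly_retention=0.85, months=3)

# How many times more subscribers the $17 plan needs to match the $27 plan.
break_even_ratio = 27 / 17

print(f"Group 1 ($27): ${group1:,.2f}")
print(f"Group 3 ($17): ${group3:,.2f}")
print(f"Break-even subscriber ratio: {break_even_ratio:.2f}")
```

In this made-up example the $17 group has more subscribers, but far fewer than the 1.59× it would need, so the $27 group earns more.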

Since our main metric is total income per test group, Test Group 1 was the true winner.

We continued monitoring for another month, and the results confirmed that Test Group 1 remained the winner. It also showed us that our customers are comfortable paying $27.

Why This Happened

This result was so interesting that we decided to investigate further. It reminded us of a Dan Gilbert TED talk about human decision-making, which offers a good explanation for what we observed.

In the research he describes, participants were asked to choose between two paintings to take home. One group was told their choice was final; they couldn’t change their mind. The other group was given one or two weeks to change their selection, similar to offering a trial period.

After one or two months, those who couldn’t change their mind were happier with their choice than those who could.

That mirrors what we saw in our experiment. By offering a free trial, we gave people the psychological option to change their mind later, which led to higher churn. Those without the trial appear to have been more committed to their choice, and they stayed subscribed longer.

This test shows that no single pricing approach works everywhere: what performs best depends on the specific offer and audience, so each case needs to be tested in its own context.

Check out the guides below to learn how to set up A/B tests on platforms like ClickFunnels and Shopify. Then, read this PostHog article to understand how to create an experiment effectively.